AI Transformation & Implementation

Mavengigs

Mavengigs is a global consulting firm providing consulting services for Mergers & Integrations (M&A) and Transformations. Through our network of independent resources and partners, we serve clients in the USA and Europe. Mavengigs is a division of Panvisage Inc. (a holding company with interests in consulting, education, real estate, and investments).

This content is a synopsis drawn from multiple sources for easy reference, for educational purposes only. We encourage everyone to become familiar with this content and then reach out to us for project opportunities.

AI Transformation

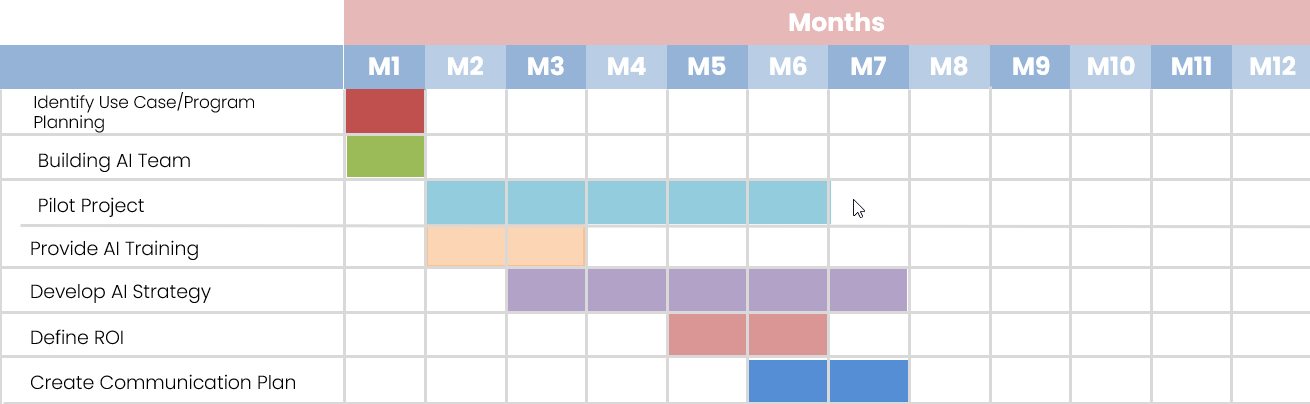

An AI transformation initiative typically spans 2-3 years, yet tangible outcomes can be anticipated within the initial 6-12 months. Embracing such a program ensures a competitive edge and allows your company to harness AI capabilities, thereby driving significant advancement.

Success Keys

Organizations can leverage external firms with both outsourced and in-house technology and talent to methodically execute multiple valuable AI projects, yielding direct business value. Adequate comprehension of AI is essential, accompanied by established processes to methodically identify and choose valuable AI projects for implementation. Strategic alignment is crucial, with the company’s direction broadly aimed at thriving in a future driven by AI technology. Transforming your esteemed company into an exceptional AI-driven entity is challenging but attainable, especially with the assistance of reliable partners.

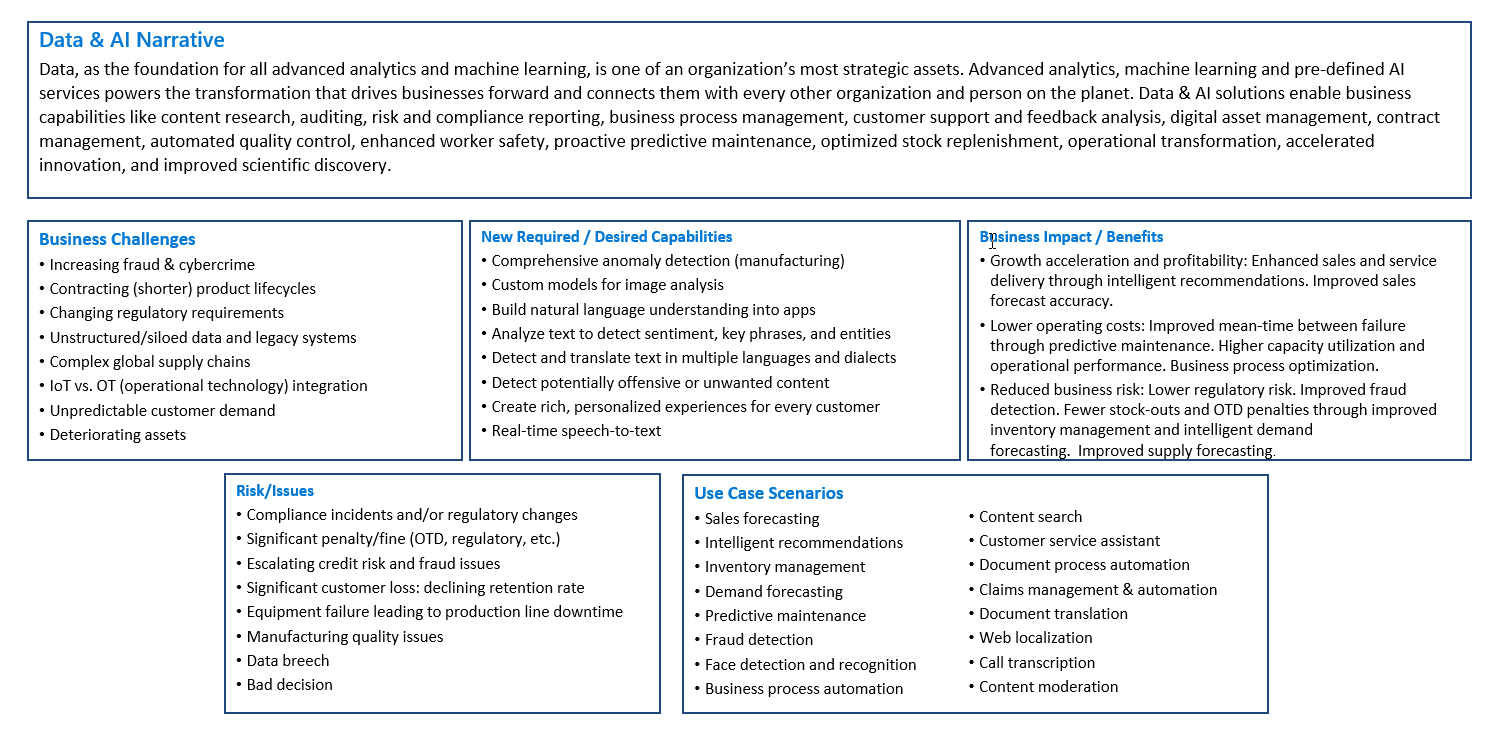

AI Strategy

An artificial intelligence strategy serves as a blueprint for integrating AI into an organization, ensuring alignment with and support for the broader business objectives. A successful AI strategy acts as a roadmap, delineating steps to leverage AI for purposes such as deriving deeper insights from data, improving efficiency, optimizing the supply chain or ecosystem, and enhancing talent and customer experiences, based on the organization’s goals. With artificial intelligence exerting its influence across nearly every industry, the importance of a well-crafted AI strategy cannot be overstated. Such a strategy can enable organizations to unleash their full potential, secure a competitive edge, and attain sustainable success amidst the dynamic landscape of the digital era. A successful AI strategy is indispensable, serving as a cornerstone for achieving business objectives, facilitating prioritization, optimizing talent and technology selections, and ensuring the seamless integration of AI to bolster organizational success.

AI Strategy Building Block

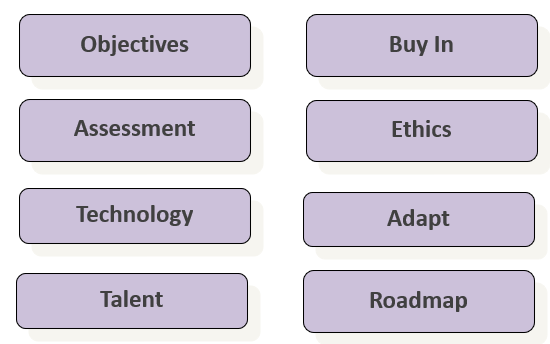

Objectives: Identify the specific problems that the organization needs to solve and the metrics that require improvement. Select business objectives that are crucial for the organization’s success and have a track record of being successfully addressed by AI (external Use Cases). This approach ensures that AI initiatives are aligned with the organization’s strategic goals and have a tangible impact on key performance indicators.

Assessment: Understand the organization, including its priorities and existing capabilities. Assess the size and strength of the IT department responsible for implementing and managing AI systems. Conduct interviews with department heads to identify potential issues that AI could help solve. This comprehensive approach ensures alignment between AI initiatives and the organization’s strategic goals while also identifying potential challenges and opportunities for implementation.

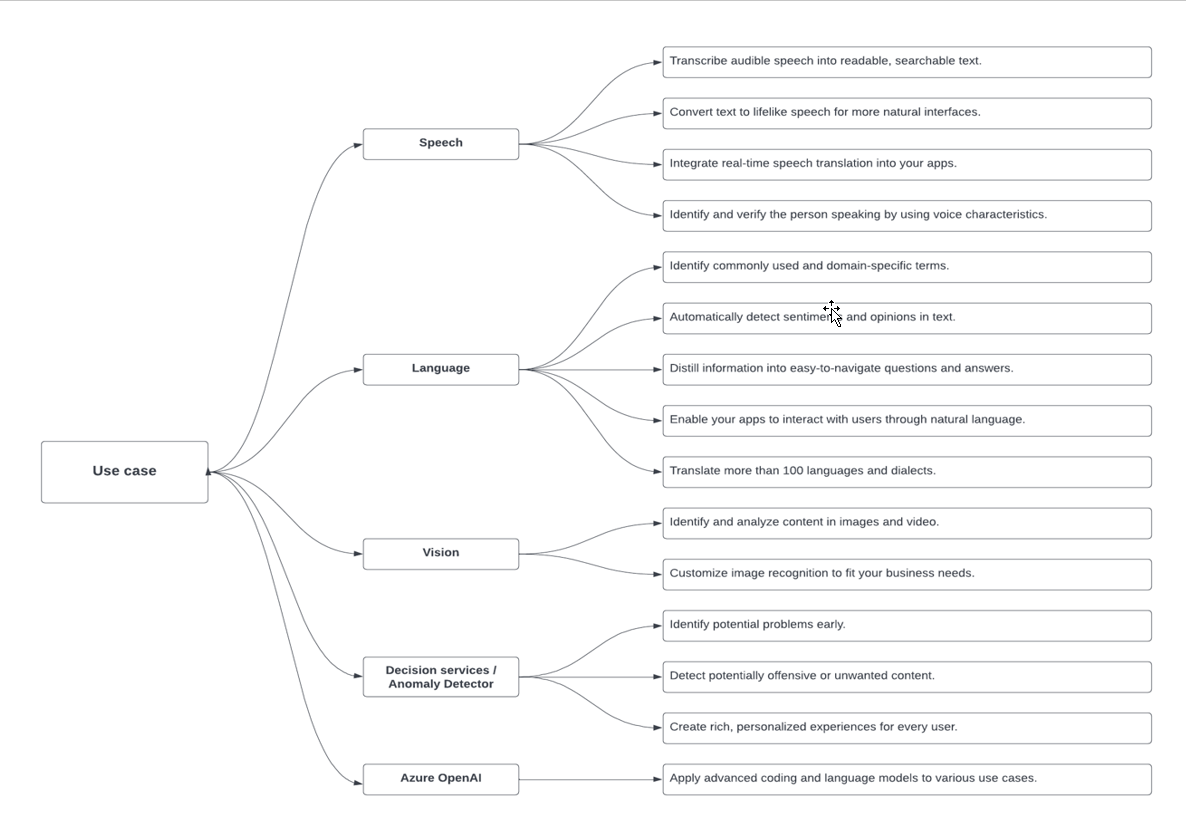

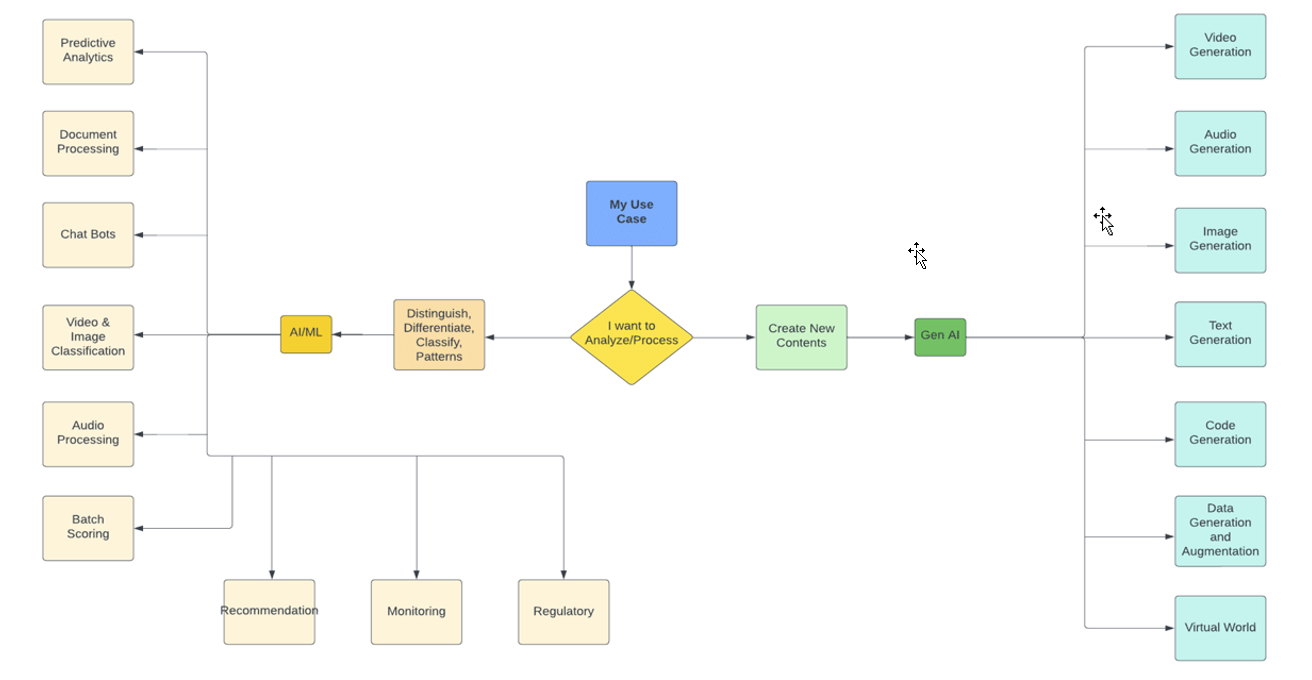

Technology: It’s crucial to gain an understanding of various AI technologies, including generative AI, machine learning (ML), natural language processing (NLP), and computer vision, among others. Researching AI use cases provides insight into where and how these technologies are applied across relevant industries. Identify the tools, vendors, platforms, methodology, and deployment approach. Identifying the issues that AI can address and the benefits it offers is essential. These may include enhancing efficiency, automating repetitive tasks, improving decision-making accuracy, personalizing customer experiences, and optimizing resource allocation, among others. Note any specific methods of implementation and any potential roadblocks encountered during deployment. This holistic understanding ensures a comprehensive approach to integrating AI effectively within the organization.

Talent: Initiate upskilling programs with the necessary AI experts. Encourage teams to stay abreast of cutting-edge AI advancements and explore innovative problem-solving methods. This approach ensures teams are equipped with the latest knowledge and skills to drive successful AI initiatives.

Buy In: Present the AI strategy to stakeholders, ensuring alignment with business objectives. Gain buy-in for the proposed roadmap by clearly communicating the benefits, costs, and expected results. Secure the necessary budget to implement the strategy by highlighting the potential return on investment and the strategic importance of AI adoption for the organization’s success. This approach ensures that stakeholders understand the value proposition of the AI strategy and are committed to its successful implementation.

Ethics/Governance: Ensure a thorough understanding of the ethical implications associated with the organization’s responsible use of AI. Establish ethical guidelines that commit to ethical AI initiatives, inclusive governance models, and actionable guidelines. Regularly monitor AI models for potential biases and implement

Adapt: Define a change management process to keep up with the fast-paced development of new products and AI technologies. Adapt the organization’s AI strategy based on new insights and emerging opportunities.

Roadmap

Salient features of a roadmap for AI implementation:

- Select Projects: Choose projects based on their alignment with business objectives and their potential to address identified needs effectively.

- Prioritize Early Successes: Identify projects that can deliver quick wins and tangible value to the business. These projects should address practical needs identified within the organization.

- Tools and Support: Assess the tools and support required for each project. Consider factors such as data infrastructure, AI algorithms, computing resources, and expertise. Organize these based on their importance and relevance to the project’s success.

- Data Infrastructure: Define the data topology and ensure access to relevant data for training and testing AI models.

- AI Algorithms: Select appropriate machine learning or deep learning algorithms based on the project requirements.

- Computing Resources: Determine the computing resources needed for training and deploying AI models, considering factors like GPU servers or cloud-based services.

- Expertise: Identify the skills and expertise required to execute the project successfully. This may include data scientists, machine learning engineers, domain experts, and project managers.

- Organize Resources: Allocate resources based on project priorities and dependencies. Ensure that the most crucial resources are allocated first to enable project success.

By following these steps, organizations can develop a roadmap for AI implementation that focuses on delivering early successes and maximizing business value.

Change Management

To keep up with the fast-paced developments of new products and AI technologies, establish a change management process that enables the organization to adapt its AI strategy effectively:

- Continuous Monitoring and Learning: Implement a system for continuous monitoring of the AI landscape, including new products, technologies, and industry trends. Stay informed about emerging opportunities and potential disruptions in the AI ecosystem.

- Agile Decision-Making: Foster a culture of agility and flexibility within the organization to respond promptly to changes in the AI landscape. Encourage experimentation and rapid iteration to adapt the AI strategy as needed.

- Cross-Functional Collaboration: Facilitate collaboration between different departments and teams within the organization to leverage diverse perspectives and expertise in adapting the AI strategy. Encourage open communication and knowledge sharing to facilitate informed decision-making.

- Feedback Mechanisms: Establish feedback mechanisms to gather input from stakeholders, employees, customers, and external partners regarding the effectiveness of the AI strategy and potential areas for improvement. Use this feedback to iteratively refine and enhance the strategy over time.

- Scenario Planning: Conduct scenario planning exercises to anticipate potential future developments in AI technology and assess their potential impact on the organization. Develop contingency plans and mitigation strategies to address potential risks and capitalize on emerging opportunities.

- Executive Oversight: Ensure that senior leadership remains actively engaged in monitoring and adapting the AI strategy. Provide regular updates to executives on the evolving AI landscape and the organization’s response to changes.

AI Organizational Plan

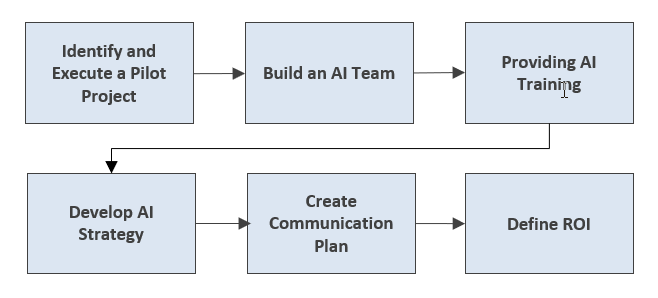

Build AI Team

In addition to leveraging outsourced partners with profound technical AI expertise to expedite initial momentum, it is advisable to establish a centralized in-house AI team. Establish and enhance an AI capability to cater to the entire organization’s needs. Initiate a series of cross-functional projects to aid various divisions or business units with AI initiatives. Upon the completion of these initial projects, establish recurring processes to consistently deliver a stream of valuable AI projects. Establish uniform standards and methodologies while proactively addressing risks and issues to define future processes effectively. Create company-wide platforms that serve the needs of multiple divisions or business units and are unlikely to be developed independently by any single division.

Provide AI Training

Executives, leaders, and stakeholders should possess a fundamental understanding of AI, encompassing basic knowledge of technology, data, and AI capabilities and limitations. Furthermore, they should grasp AI’s implications on corporate and operational strategies.

Empower executives to comprehend the potential benefits of AI for the enterprise, kickstart the development of an AI strategy, make informed resource allocation decisions, and foster seamless collaboration with an AI team dedicated to supporting valuable AI projects. They must be capable of establishing the direction for AI projects, allocating resources effectively, monitoring and tracking progress, and making necessary corrections to ensure successful project delivery.

Stakeholders should possess a fundamental grasp of AI project workflows and processes, as well as an understanding of roles and responsibilities within AI teams. Additionally, they should be proficient in managing AI teams effectively.

Technical stakeholders should have a technical understanding of machine learning and deep learning, along with a basic comprehension of other AI tools. They should understand the available tools, including open-source and third-party options, for constructing AI and data systems, and be able to implement AI teams’ workflows and processes. They should also engage in ongoing education to remain abreast of evolving AI technology.

Build Communication Plan

When running a communications program to ensure alignment with key stakeholders regarding the significant impact of AI on your business, consider the following for each audience:

Employees: Provide regular updates and transparent communication about AI initiatives and their potential impact on job roles and responsibilities. Offer training and upskilling programs to help employees adapt to changes brought about by AI implementation. Encourage open dialogue and address any concerns or misconceptions about AI’s effects on job security and career development. Highlight the potential benefits of AI for employees, such as increased efficiency, productivity, and opportunities for innovation.

Customers: Communicate how AI will enhance products or services, improve customer experiences, and address their needs more effectively. Assure customers of data privacy and security measures in place when implementing AI-driven solutions. Provide educational materials or resources to help customers understand how AI technologies benefit them. Solicit feedback from customers to ensure that AI implementations align with their preferences and expectations.

Regulators and Government Agencies: Ensure compliance with relevant regulations and guidelines governing AI technologies and data usage. Engage in proactive communication with regulators to address any questions or concerns about AI implementations and their potential implications. Collaborate with industry groups and policymakers to advocate for policies that support responsible AI adoption and innovation. Demonstrate a commitment to ethical AI principles and practices, including transparency, fairness, and accountability.

Investors: Clearly articulate the strategic rationale behind AI investments and the potential impact on business growth and profitability. Showcase successful AI initiatives and their contribution to key performance metrics and financial outcomes. Address any concerns or risks related to AI implementation, such as regulatory compliance, ethical considerations, or potential disruptions. Provide updates on the progress of AI projects and milestones achieved to demonstrate accountability and transparency.

By tailoring communication strategies to each audience and addressing their specific concerns and interests, you can foster alignment and build trust in your organization’s approach to AI implementation.

Define ROI

AI projects should deliver real and tangible business value. When evaluating the return on investment (ROI) of an AI project, consider metrics that directly reflect the business impact. Some examples of ROI metrics that provide business value include:

- Cost Reduction: Measure the reduction in operational costs achieved through automation or efficiency gains enabled by AI.

- Revenue Increase: Track the increase in revenue resulting from improved customer engagement, personalized recommendations, or enhanced sales forecasting enabled by AI.

- Time: Quantify the time saved by employees due to automation of repetitive tasks or streamlining of processes with AI.

- Customer Satisfaction: Assess improvements in customer satisfaction scores or Net Promoter Score (NPS) due to enhanced AI-driven experiences.

- Productivity Enhancement: Measure the increase in productivity of employees or processes facilitated by AI tools or automation.

- Risk Mitigation: Evaluate the reduction in risk exposure or improved compliance achieved through AI-driven risk management solutions.

- Quality Improvement: Assess improvements in product or service quality achieved through AI-driven optimization or predictive maintenance.

- Market Share Growth: Monitor the expansion of market share resulting from AI-enabled competitive advantages or enhanced market penetration strategies.

- Innovation Impact: Track the number of new product or service offerings, patents, or innovations driven by AI technologies.

- Employee Retention and Satisfaction: Measure improvements in employee retention rates or job satisfaction resulting from AI-enabled enhancements to work processes or job roles.

By selecting ROI metrics that align with specific business objectives and directly demonstrate the value generated by AI projects, organizations can effectively evaluate the success and impact of their AI investments.
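As a rough illustration of how several of the metrics above can be rolled into a single figure, the sketch below computes a simple ROI as (benefit - cost) / cost. The function name and every monetary figure are hypothetical placeholders, not data from any real project, and a real evaluation would also need to account for timing and attribution.

```python
def simple_roi(total_benefit: float, total_cost: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical annual figures for one pilot project (illustrative only).
benefits = {
    "cost_reduction": 120_000.0,    # operational savings from automation
    "revenue_increase": 80_000.0,   # uplift attributed to the AI feature
    "time_saved": 1_500 * 40.0,     # hours saved x assumed hourly cost
}
costs = {
    "build": 150_000.0,             # assumed one-off implementation cost
    "run": 50_000.0,                # assumed yearly operating cost
}

roi = simple_roi(sum(benefits.values()), sum(costs.values()))
print(f"ROI: {roi:.0%}")
```

Keeping each benefit as a named line item makes it easier to trace the final figure back to the business metric it came from.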

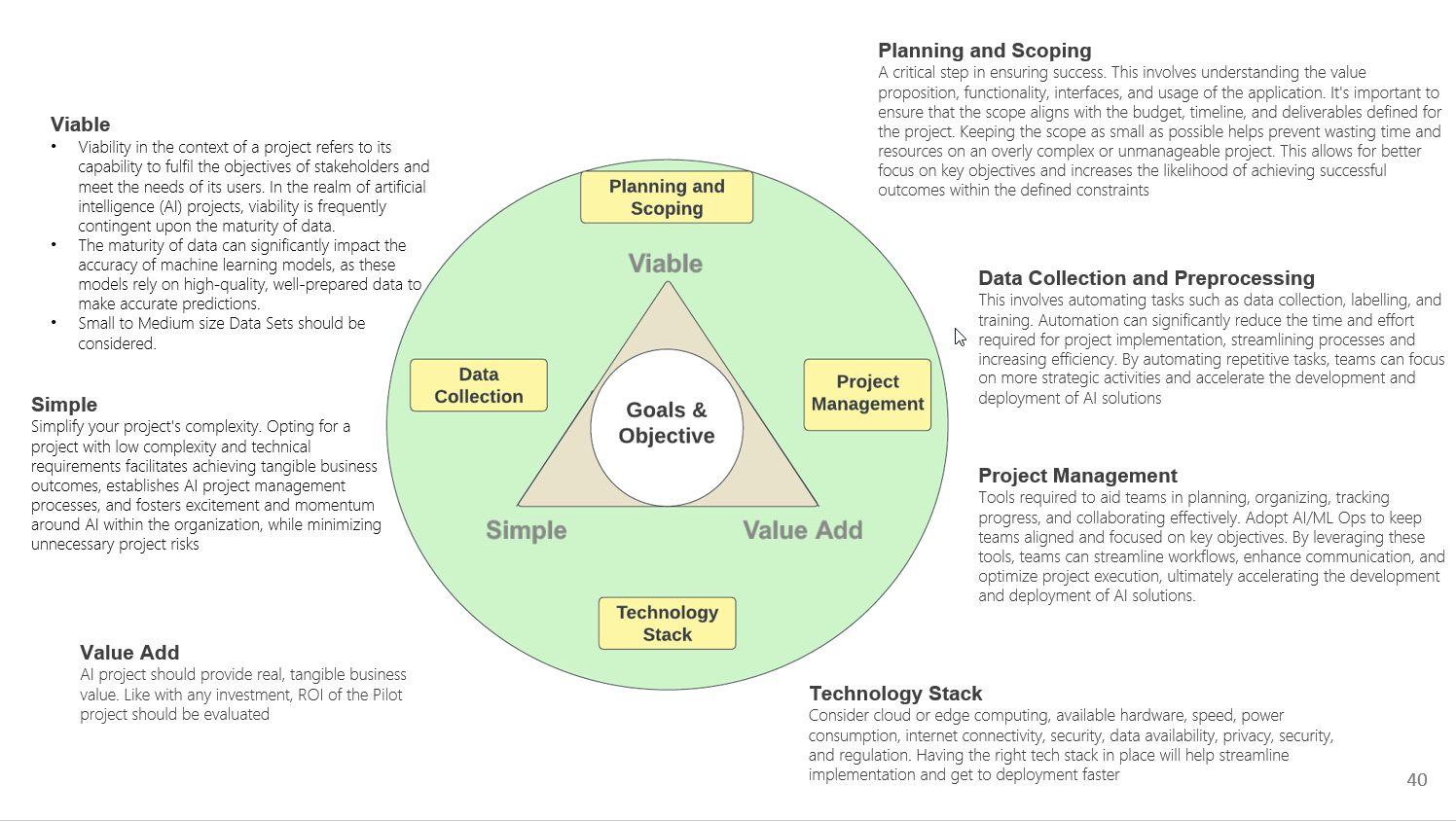

AI Pilot Project

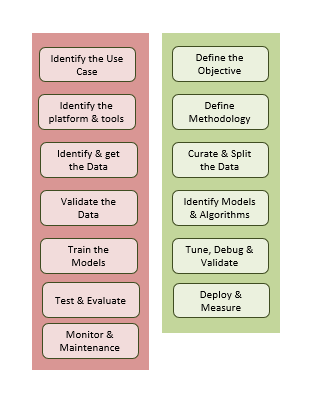

- Identify the Use Case: Identify use cases where the adoption of artificial intelligence can bring tangible benefits to your business. The projects should be sufficiently impactful to help the organization gain familiarity with AI and to persuade others within the organization to invest in additional AI initiatives based on the initial successes achieved.

- Define the Objectives: Clearly express what success means for your project. Define business metrics and performance metrics, and evaluate the performance of the model against those metrics.

- Identify Tools & Platform: If a tech stack has already been selected, it’s crucial to document it at this stage. Selection of specific AI tools should be done by the technical team, as they are best equipped to determine the most suitable tools based on project requirements and expertise.

- Define Methodology, AI/ML Ops: AI/MLOps approaches foster collaboration between AI/ML teams, application developers, and IT operations to accelerate the creation, training, deployment, and management of ML models and ML-powered applications. Automation, often in the form of continuous integration/continuous delivery (CI/CD) pipelines, makes rapid, incremental, and iterative change possible for faster model and application development life cycles. Define the repositories to be used.

- Define the Data Topology based on Business Objectives.

- Identify and obtain data with the help of domain and data experts. Data may come from the following sources:

- Existing Data: Use data already held in-house.

- Data Collection: Use automated tools and scripts to scrape data from websites and other sources.

- Purchased Data: Buy data from an external third-party data provider.

- Generative Model: Use an AI algorithm that learns the underlying structure of a given dataset and generates new data based on what it learns. The generated data can then be used to augment the original dataset for the AI project.

Curate and Split the Data: Eliminate any irrelevant or redundant information from the data to make it suitable for use in the project. This step is crucial to ensure that the model can achieve the desired results effectively. Split the data into (a) Training Data Set (b) Validation Data Set (c) Test Data Set.
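The curate-and-split step can be sketched in a few lines of standard-library Python. The 70/15/15 proportions, the fixed seed, and the helper names `drop_duplicates` and `split_dataset` are assumptions chosen for illustration; the right proportions depend on the size and nature of the dataset.

```python
import random


def drop_duplicates(records):
    """Remove exact duplicates while keeping the first occurrence order."""
    return list(dict.fromkeys(records))


def split_dataset(records, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle and split records into training, validation, and test sets."""
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave room for a test set")
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]      # whatever remains is the test set
    return train, val, test


# Hypothetical raw data: 100 unique record ids plus three redundant copies.
raw = list(range(100)) + [0, 1, 2]
curated = drop_duplicates(raw)
train, val, test = split_dataset(curated)
print(len(train), len(val), len(test))
```

The test set is held back until final evaluation so that it reflects data the model has never seen.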

Validate the Data: Utilize your training dataset to perform various tasks such as plotting data for visualization, conducting sanity checks to ensure data quality and consistency, and engineering new features to enhance model performance. During the exploration phase, raw data is usually analyzed using a combination of manual workflows and automated data-exploration techniques. This process involves visually exploring datasets to identify similarities, patterns, and outliers, as well as to discern relationships between different variables.
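A minimal sketch of the sanity checks mentioned above might look like the following. The field names, the allowed range, and the sample rows are hypothetical; a real project would derive them from the data dictionary agreed with domain experts.

```python
def sanity_check(rows, required, ranges):
    """Return a list of (row_index, problem) tuples found in the data."""
    problems = []
    for i, row in enumerate(rows):
        # Flag required fields that are absent or empty.
        for field in required:
            if row.get(field) is None:
                problems.append((i, f"missing {field}"))
        # Flag numeric values outside the plausible range.
        for field, (low, high) in ranges.items():
            value = row.get(field)
            if value is not None and not (low <= value <= high):
                problems.append((i, f"{field} out of range: {value}"))
    return problems


# Hypothetical training rows (illustrative only).
rows = [
    {"age": 34, "income": 52_000},
    {"age": None, "income": 48_000},
    {"age": 212, "income": 51_000},
]
problems = sanity_check(rows, required=["age", "income"], ranges={"age": (0, 120)})
for index, problem in problems:
    print(f"row {index}: {problem}")
```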

Train the Model: Identify and leverage patterns in the data. Split the training data and use a portion to train candidate models, where patterns found in the data are translated into models. Evaluate the performance of these models on the remaining training data.

Tune, Debug and Validate: This process involves selecting and tuning parameters and hyperparameters to ensure that the models are functioning as expected and producing the desired results. Iterative validation should be an integral part of the implementation process. Validate the models by testing them on real-world data to ensure accuracy and reliability. Continuously monitor model performance and make real-time modifications to enhance their effectiveness. Verify that all components of the project are appropriately interconnected, including the data pipelines. This ensures seamless flow of data and processes throughout the project.

Test and Evaluate

Comprehensive testing is essential to ensure that the models are operating efficiently, meeting the expected accuracy levels, and running within the specified time limits. This may involve one or more of the following testing methods:

- Model Performance Testing: Measure precision, recall, F-score, and the confusion matrix (false and true positives, false and true negatives) against predetermined accuracy targets.

- Predictive Model Testing: Evaluate the generalization performance of a predictive model by testing it on an independent dataset, which the model has not encountered during training. This process involves assessing how accurately the model can predict the outcomes of unseen data, with the goal of determining its effectiveness in real-world scenarios.

- Metamorphic Testing: It involves testing the system with mutated input data, and then evaluating the quality of the output to ensure it remains consistent with the expected output. This approach helps identify any hidden flaws or errors in the system that might not be detected by traditional testing methods.

- Dual coding or algorithm ensemble Testing: Two or more algorithms are applied to the same dataset. The outputs of these algorithms are then compared and analyzed to determine which one is most accurate and/or efficient. This type of testing is crucial for ensuring the accuracy of an AI system and identifying any potential issues or discrepancies within the system.
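To make the model performance metrics in the list above concrete, the sketch below derives the confusion-matrix counts, precision, recall, and F1 score for a binary classifier. The labels and predictions are made-up values for illustration, and the helper names are assumptions rather than part of any particular library.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary classifier."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1
        elif predicted == positive:
            fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1, returning 0.0 when undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical ground-truth labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
precision, recall, f1 = precision_recall_f1(tp, fp, fn)
print(f"TP={tp} FP={fp} FN={fn} TN={tn}")
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

These values can then be compared with the accuracy targets agreed when the project objectives were defined.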

Deploy & Measure

Deploying an AI project is a critical step in AI project management, as it involves making the AI software/model accessible to the target audience, whether internal users or customers. The deployment process varies depending on factors such as the type of AI tool, the deployment environment, and the required infrastructure. Key considerations include:

- Security: Implement security measures to protect sensitive data and prevent unauthorized access to the AI system.

- Performance: Verify that the deployed AI system meets performance requirements in terms of response time, throughput, and resource utilization.

- Scalability: Ensure that the AI system can handle varying levels of workload and user demand as it scales to larger audiences or datasets. Consider the number of users, the amount of data, and the number of models that will be running simultaneously.
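One way to check the response-time and throughput requirements listed above is to time a batch of requests and summarize the latencies. This is only a sketch: `fake_predict` is a hypothetical stand-in for the deployed model call, and the measurement is sequential, so it does not capture behavior under concurrent load.

```python
import statistics
import time


def measure_latency(predict, inputs):
    """Call predict on each input and return per-call latencies in seconds."""
    latencies = []
    for item in inputs:
        start = time.perf_counter()
        predict(item)
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies):
    """Summarize latencies as median, 95th percentile, and sequential throughput."""
    cuts = statistics.quantiles(latencies, n=100)  # 99 percentile cut points
    return {
        "p50_s": cuts[49],
        "p95_s": cuts[94],
        "throughput_rps": len(latencies) / sum(latencies),
    }


def fake_predict(item):
    """Hypothetical placeholder for a real model inference call."""
    return sum(i * i for i in range(1000)) + item


summary = summarize(measure_latency(fake_predict, range(200)))
print(summary)
```

The resulting figures can be compared with the performance targets set for the deployment.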

Monitor & Maintenance

AI systems require ongoing monitoring and maintenance to ensure that they continue to deliver the desired value to users. The project manager should use the initial project deliverables, objectives and KPIs to measure long-term performance.

- Accuracy: Evaluate the accuracy of the models by comparing their performance against project benchmarks. Expect accuracy levels to evolve over time as the models are tested against more real data in a production environment. Regularly monitor and analyse model performance to ensure that it continues to meet desired standards and adjust as necessary to maintain optimal accuracy.

- Data Pipeline: Monitor data pipelines closely, identifying and addressing bottlenecks or inefficiencies in data collection, transformation, and model training. Track metrics like processing times, resource use, and system throughput to ensure smooth operation. Regular monitoring enables timely issue resolution, maintaining AI system performance.

- Security: Monitor the system for any potential security vulnerabilities, ensure that any data that is being processed is encrypted, and that any data that is being stored is secure.

- Changing requirements: Make any adjustments to the AI system based on newly available data, changes in business needs, or advancements in the industry.
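The accuracy monitoring described above can be sketched as a rolling comparison against the project benchmark. The class name, the benchmark of 0.90, the tolerance, and the window size are all assumptions for illustration; suitable values depend on the KPIs defined for the project.

```python
from collections import deque


class AccuracyMonitor:
    """Track rolling accuracy over the most recent predictions."""

    def __init__(self, benchmark, tolerance=0.05, window=100):
        self.benchmark = benchmark          # accuracy agreed as the project target
        self.tolerance = tolerance          # allowed drop before raising a flag
        self.outcomes = deque(maxlen=window)

    def record(self, prediction, actual):
        """Store whether a production prediction matched the real outcome."""
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self):
        """Return True when accuracy falls below benchmark minus tolerance."""
        accuracy = self.rolling_accuracy()
        return accuracy is not None and accuracy < self.benchmark - self.tolerance


# Hypothetical stream of (prediction, actual) pairs: 7 of 10 are correct.
monitor = AccuracyMonitor(benchmark=0.90, tolerance=0.05, window=10)
pairs = [(1, 1)] * 7 + [(1, 0)] * 3
for prediction, actual in pairs:
    monitor.record(prediction, actual)

print(monitor.rolling_accuracy(), monitor.needs_attention())
```

A flag from a monitor like this would typically trigger investigation or retraining rather than an automatic change to the model.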

Additional Reference

AI Development key touch points

AI/ML development involves experimentation, which implies multiple iterations of each process. The tasks include, but are not limited to:

- Learn the business domain, including relevant history, such as whether the organization or business unit has attempted similar projects in the past from which you can learn.

- Formulate initial hypotheses (IH) to test and begin learning the data.

- Assess the impact and security requirements of using data from third-party services.

- Prepare and maintain high-quality data for training ML models.

- Develop data sets for testing, training, and production purposes.

- Set up the environment for executing models and workflows, including fast hardware and parallel processing.

- Determine the availability of APIs that address your requirements.

- Select frameworks and libraries to accelerate development, based on your requirements for ease of use, development speed, size and speed of the solution, and level of abstraction and control.

- Determine the methods, techniques, and workflow you intend to follow for model scoring.

- Explore the data to learn about the relationships between variables, and subsequently select key variables and the models you are likely to use.

- Track models in production to detect performance degradation.

- Perform ongoing experimentation with new data sources, ML algorithms, and hyperparameters, and track these experiments.

- Maintain the veracity of models by continuously retraining them on fresh data.

- Avoid training-serving skew caused by inconsistencies in data and in runtime dependencies between training environments and serving environments.

- Handle concerns about model fairness and adversarial attacks (small, imperceptible changes to input data made to deceive the machine learning model).

- Determine, through observation, whether the project succeeded or failed based on the criteria developed in the Discovery phase.

- Identify key findings, quantify the business value, and develop a narrative that summarizes the findings and conveys them to stakeholders and sponsors.

- Deliver final reports, briefings, code, and technical documents.

AI Architecture and Governance

AI Governance

AI governance is crucial to prevent existential risks and to maintain human oversight of AI applications. Ensuring protection, fairness, ethics, responsibility, and transparency is absolutely essential:

- Lawfully collect the data that is required.

- Be transparent about why data is being collected, what it will be used for, and who will have access to it.

- Follow legal and regulatory requirements related to data privacy, ethics, and fairness.

- Delete data when its “agreed purpose” has been fulfilled.

- Remove unnecessary personal data.

- Implement a process to remove all the data stored about a specific individual whenever required.

- Anonymize data, where possible, to remove personal identifiers.

- Encrypt personal data.

- Limit access to data and ensure data is used only for the purposes agreed upon.

- Incorporate security governance and permissions to store and process data.

- Secure data storage environments and monitor data access.

- Incorporate an audit trail of individuals who view or modify data.

- Implement a process to provide an individual or governing body with a copy of all the data held about that individual.

- Implement a process to interpret the output of a system and act on the outcome.

Be cognizant of the system’s potential impact on society and individuals, avoid biases and discrimination, and ensure fairness in outcomes and decisions. Allow users to understand how decisions are made to foster trust.
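As one possible illustration of removing personal identifiers, the sketch below drops direct identifiers from a record and replaces them with a keyed hash token. The field names, the record, and the key are hypothetical. Note that keyed hashing is pseudonymization rather than full anonymization: anyone holding the key can re-link tokens to individuals, so the key itself must be protected and the approach should be reviewed against the applicable privacy regulations.

```python
import hashlib
import hmac

# Hypothetical list of fields treated as direct personal identifiers.
PERSONAL_FIELDS = ("name", "email")


def pseudonymize(record, secret_key, id_field="email"):
    """Drop personal fields and add a stable keyed-hash token in their place."""
    token = hmac.new(
        secret_key,
        record[id_field].encode("utf-8"),
        hashlib.sha256,
    ).hexdigest()
    cleaned = {k: v for k, v in record.items() if k not in PERSONAL_FIELDS}
    cleaned["subject_token"] = token  # lets records be joined without the email
    return cleaned


# Illustrative values only; a real key would come from a secrets manager.
key = b"example-key-do-not-use-in-production"
record = {"name": "Jane Doe", "email": "jane@example.com", "plan": "pro"}

safe = pseudonymize(record, key)
print(sorted(safe))
```

Because the same input always yields the same token, records about one individual can still be located together when a deletion or data-copy request has to be served.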

AI Deployment key touch points

CI/CD: Development and Release

After verifying that the model works technically, it is imperative to test the model’s effectiveness in production by gradually serving it alongside the existing model and running online experiments. A subset of users is redirected to the new model in stages, testing new functionality in production with live data, according to the online experiment in place. The outcome of the experiments is the final criterion that decides whether the model can be fully released and replace the previous version. A/B testing and multi-armed bandit (MAB) testing are common online experimentation techniques that can be used to quantify the impact of the new model. There are two deployment patterns: (a) Deploy Model and (b) Deploy Code. Deploy Code is advantageous where access to production data is restricted, because it allows the model to be trained on production data in the production environment.
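The staged redirection of users described above can be sketched with deterministic, hash-based bucketing. This is one common approach, not necessarily the one any given platform uses; the function name, the user-id format, and the rollout percentages are assumptions for illustration. It covers only the traffic split of an A/B-style experiment, not the statistical analysis or a bandit algorithm.

```python
import hashlib


def assigned_model(user_id: str, rollout_percent: int) -> str:
    """Route a user to the new or existing model based on a stable hash bucket."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return "new" if bucket < rollout_percent else "existing"


# Hypothetical user ids and rollout stages.
users = [f"user-{i}" for i in range(1000)]
for stage in (5, 25, 50):
    share = sum(assigned_model(u, stage) == "new" for u in users) / len(users)
    print(f"rollout {stage}% -> observed share on new model: {share:.1%}")
```

Because each user's bucket is fixed, raising the rollout percentage only adds users to the new model and never moves earlier users back, which keeps the experience consistent across stages.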

Please contact us today!

Mavengigs

16192 Coastal Highway, Lewes, DE 19958

Contact Us

Ph: (310) 694-4750 sales@mavengigs.com
